In this post, I will cover how you can save the output from an Azure Data Factory (ADF) or Azure Synapse Pipeline Web Activity as a Blob file in an Azure Storage Account. You could use the same method to save the output from other activities.

I use this method every now and then, mainly as an easy way of passing data returned from a REST API between a parent Pipeline and a Data Flow, or between different Pipelines, without needing anything like a database. I can just save the data as a JSON blob file in a Storage Account to be picked up elsewhere.

Storage Account

First, you will need a Storage Account. If you don’t already have one, take a look at this Microsoft guide on creating one: https://docs.microsoft.com/en-us/azure/storage/common/storage-account-create?tabs=azure-portal

Blob Container

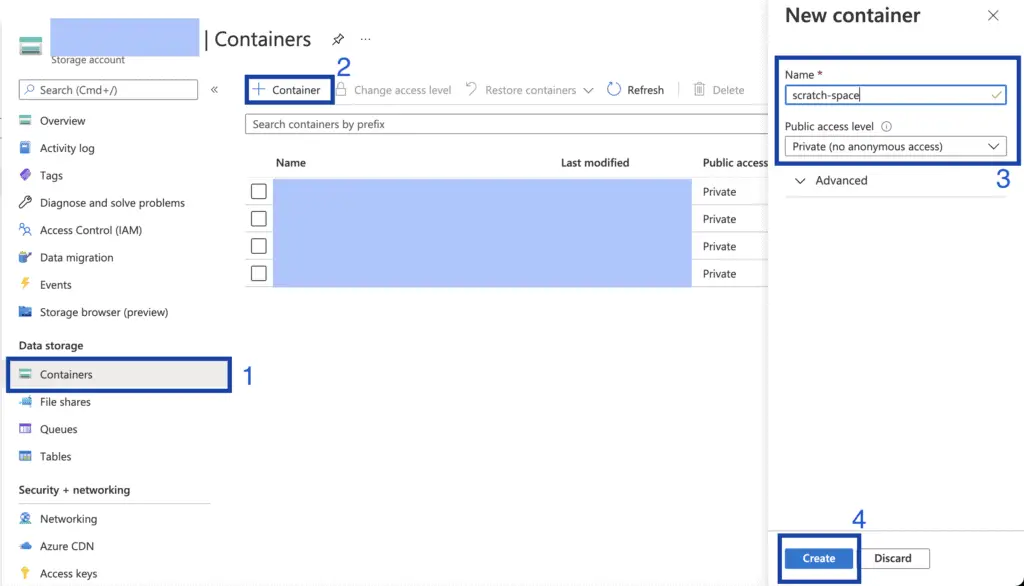

If you already have a container in your Storage Account that you would like to use, you can skip this step.

Within your chosen Storage Account create a Container to store your blob file(s).

- Within your Storage Account

- Click Containers under Data Storage

- Click the option to add a new container

- Give your container a name and make a note of it, as we will need it later

- Setting the access level as private is fine.

- Click Create

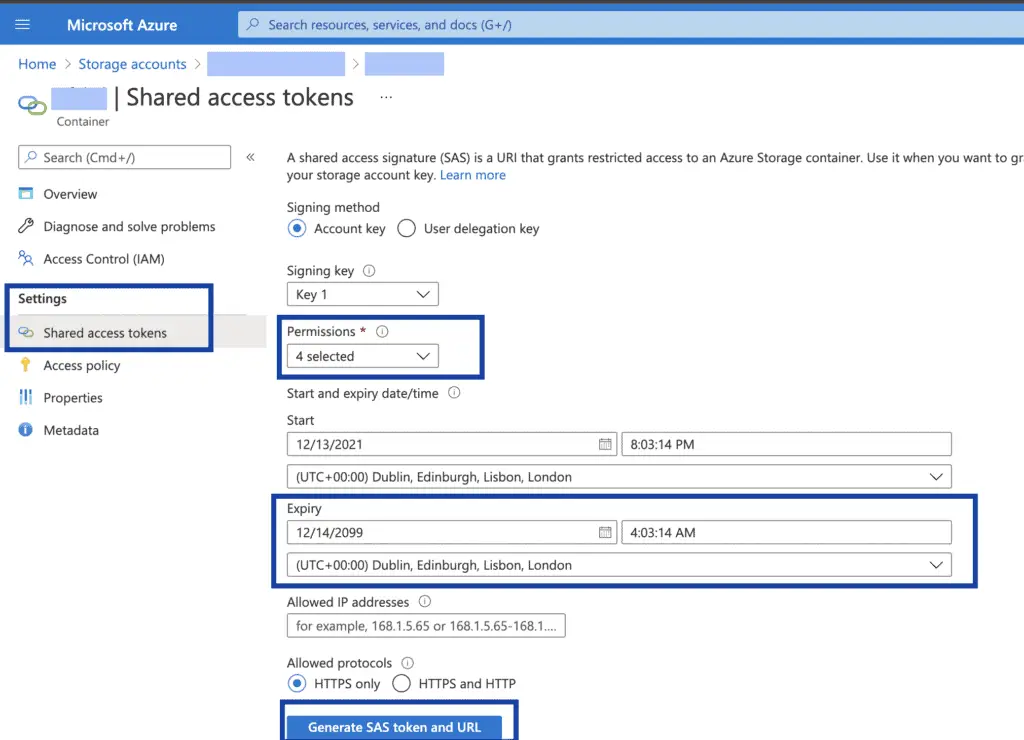

Shared Access Token

To allow Azure Data Factory to have write permission to your chosen container in your Storage Account you will need to create a shared access token.

- Within your Storage Account

- Click Containers under Data Storage

- Click your container

- Click Shared access tokens under Settings

- Grant at least the permissions Read, Add, Create and Write.

- Set an appropriate expiry date.

- Click Generate SAS token and URL

- Take a copy of the Blob SAS URL at the bottom
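Before pasting the URL into a pipeline, it can be worth checking that the token carries the permissions listed above and has not already expired. The following is a minimal Python sketch using only the standard library. The function name `check_sas_url` is hypothetical, and the sketch assumes the token uses the `sp` (permissions) and `se` (expiry) query parameters with a full UTC timestamp such as `2099-11-29T07:08:09Z`; other expiry formats would need extra handling.

```python
from datetime import datetime, timezone
from urllib.parse import urlsplit, parse_qs

# Permission letters for Read, Add, Create and Write.
REQUIRED_PERMISSIONS = set("racw")


def check_sas_url(sas_url, now=None):
    """Return (missing_permissions, expired) for a Blob SAS URL.

    Hypothetical helper: assumes 'sp' and 'se' are present and that
    'se' is a full UTC timestamp (YYYY-MM-DDTHH:MM:SSZ).
    """
    query = parse_qs(urlsplit(sas_url).query)

    # 'sp' is a string of single-letter permissions, e.g. "racwdli".
    granted = set(query.get("sp", [""])[0])
    missing = REQUIRED_PERMISSIONS - granted

    # 'se' is the expiry time of the token.
    expiry = datetime.strptime(
        query["se"][0], "%Y-%m-%dT%H:%M:%SZ"
    ).replace(tzinfo=timezone.utc)

    now = now or datetime.now(timezone.utc)
    return missing, expiry <= now
```

An empty set of missing permissions together with `expired` being `False` suggests the token should be usable for the steps that follow.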

Saving to Blob

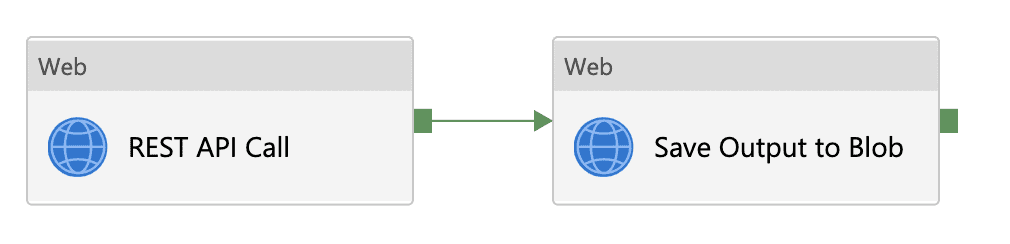

In this example, I have a Web Activity that is performing a REST API call and I want to save the output from this activity to a blob.

Saving to a blob can be done with another Web activity, so you end up with a layout like the one below: a Web activity collecting your data, and a second that picks up the output from the first and performs an HTTP PUT to blob storage.

I am only going to cover how to set up the “Save Output to Blob” web activity in this example.

Here is how it is done:

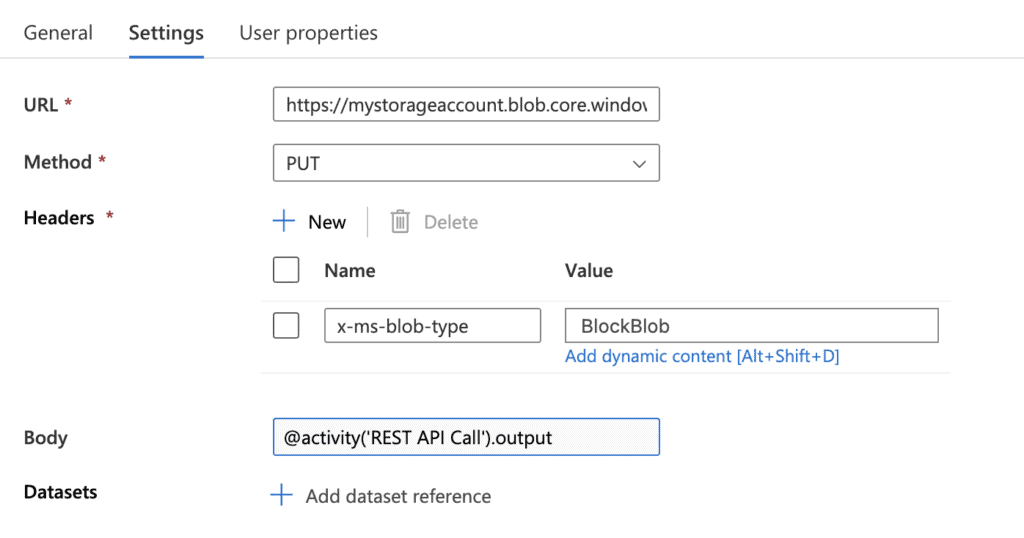

- Create a new Web activity, name it as required (“Save Output to Blob” in my example) and link it to your source activity (as above).

- Open the properties for your newly created Web Activity and select the Settings tab

- Set the URL to be your previously copied Blob SAS URL, adding the desired blob file name after the container name in the URL.

For example:

If your URL were:

https://mystorageaccount.blob.core.windows.net/mycontainer?sp=racwdli&st=2021-11-28T23:08:09Z&se=2099-11-29T07:08:09Z&spr=https&sv=2020-08-04&sr=c&sig=xyz

You would amend it to be:

https://mystorageaccount.blob.core.windows.net/mycontainer/blobfilename.json?sp=racwdli&st=2021-11-28T23:08:09Z&se=2099-11-29T07:08:09Z&spr=https&sv=2020-08-04&sr=c&sig=xyz

- Set the Method to be PUT

- Add a header named “x-ms-blob-type” with a value of “BlockBlob”

- Set the Body to be dynamic content with the value @activity('REST API Call').output, replacing “REST API Call” with the name of your source activity
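To make the request that the Web activity sends more concrete, here is a rough Python equivalent using only the standard library. It is a sketch under assumptions rather than what ADF does internally: the function names `blob_url` and `build_put_request` are hypothetical, the payload is assumed to be JSON-serialisable, and the `Content-Type` header is an optional addition that is not part of the steps above.

```python
import json
from urllib.parse import urlsplit, urlunsplit
from urllib.request import Request, urlopen


def blob_url(container_sas_url, blob_name):
    """Insert the blob file name after the container name, keeping the SAS query."""
    parts = urlsplit(container_sas_url)
    path = parts.path.rstrip("/") + "/" + blob_name
    return urlunsplit((parts.scheme, parts.netloc, path, parts.query, parts.fragment))


def build_put_request(container_sas_url, blob_name, payload):
    """Build (but do not send) the HTTP PUT that writes the payload as a block blob."""
    body = json.dumps(payload).encode("utf-8")
    return Request(
        blob_url(container_sas_url, blob_name),
        data=body,
        method="PUT",
        headers={
            # Header required by the steps above.
            "x-ms-blob-type": "BlockBlob",
            # Assumption: optional, marks the blob content as JSON.
            "Content-Type": "application/json",
        },
    )


# Sending the request needs a real SAS URL, so it is left commented out:
# with urlopen(build_put_request(sas_url, "blobfilename.json", {"key": "value"})) as resp:
#     print(resp.status)
```

Building the request separately from sending it makes it easy to inspect the final URL and headers, which is usually where a mistake in the Web activity settings shows up.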

This was helpful. Thanks for sharing.

its working perfectly

Glad it helped!

EXCELLENT.IT WORKED.GOOD PIECE OF INFORMATION.THANKS

Hi, I used the same process, but after some time it failed with this error:

The payload including configurations on activity/dataSet/linked service is too large. Please check if you have settings with very large value and try to reduce its size.

Has anyone else had an error like this?

Thanks!

Can you please create one with Key Vault?

Good method, but can we use a linked service instead of a SAS token in your scenario?